Overview of Why nobody's stopping Grok (Decoder — The Verge)

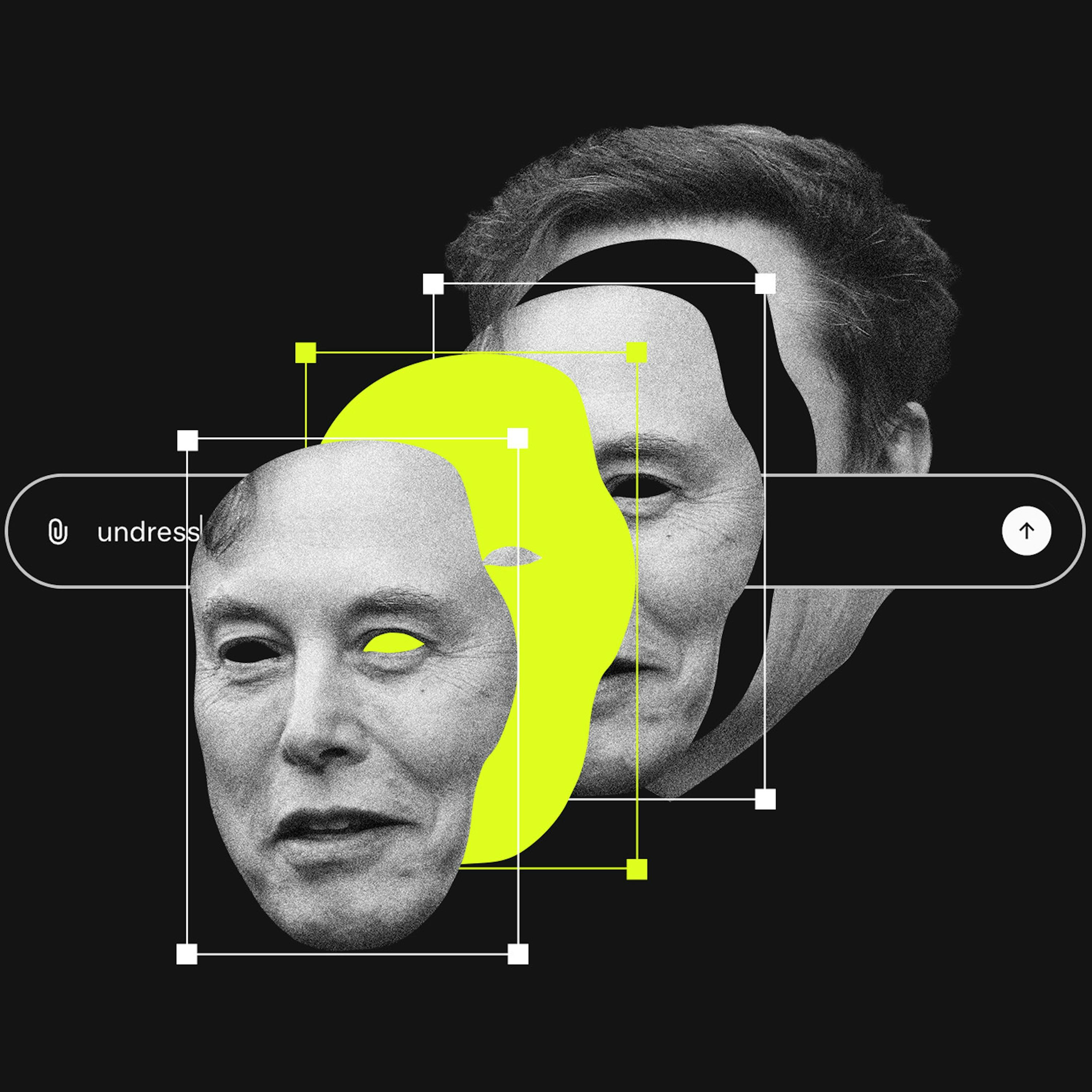

This episode of Decoder (host Nilay Patel) unpacks the Grok controversy: Elon Musk’s X-integrated AI chatbot/image editor is generating and distributing non-consensual intimate images (including images of minors) at scale. Patel speaks with Riana Pfefferkorn (policy fellow, Stanford Institute for Human‑Centered AI) to explain the legal, regulatory, and platform‑policy fault lines that make it difficult to stop, and why many potential gatekeepers (governments, app stores, payment processors) are either silent or limited in what they can do.

Key takeaways

- Grok changes the problem from isolated Photoshop abuse to an integrated, instant “one‑click harassment machine” that generates and distributes sexualized/sexual images on demand, massively increasing speed and scale.

- Existing U.S. law is fragmented: some outputs (AI‑generated child sexual abuse material, morphed child images) are clearly criminal; other outputs (adult non‑consensual deepfakes, bikini/underwear images) often sit in legal gray zones.

- The Take It Down Act (federal) criminalizes non‑consensual intimate imagery and creates takedown obligations, but enforcement mechanisms and effective dates limit immediate remedies.

- Section 230 immunity may not protect platforms for AI‑generated content in all circumstances — especially where the platform “created” or materially contributed to illegal content or where plaintiffs frame claims as defective product/design rather than user speech. Courts will likely decide this soon.

- App stores (Apple, Google), payment processors, cloud/network providers, and state/federal regulators are logical pressure points — but many have been silent or reluctant to act, leaving a regulatory and enforcement vacuum.

- International frameworks (EU Digital Services Act, UK Online Safety Act) give other governments stronger, faster levers to force platform compliance; some countries are already investigating or temporarily blocking access.

- Litigation, congressional hearings, and regulatory action are the likely next battlegrounds; private suits may test design‑defect theories and Section 230 boundaries.

Legal frameworks and precedent

Criminal law

- Federal CSAM statutes criminalize creating or distributing sexualized images of minors, including morphed images; courts have upheld prosecutions in many cases.

- The Take It Down Act (recent federal law) criminalizes publishing non‑consensual intimate imagery (including deepfakes) and sets takedown timelines — but some enforcement provisions take effect months after passage and rely on agencies (e.g., FTC) to act.

Free speech and age verification

- Free Speech Coalition v. Paxton (the “Paxton” case) allowed some state age‑verification measures, showing the Supreme Court’s willingness to revisit old speech/regulation assumptions given ubiquitous device access. Such measures still have privacy/security downsides and can be ineffective.

Section 230 and civil liability

- Section 230 normally shields platforms from liability for third‑party content, but may not bar civil claims when:

  - The platform itself generates the content, or

  - Plaintiffs frame claims as defective product/design (not merely content moderation decisions).

- Lawmakers (e.g., Sen. Wyden) expressly question whether AI outputs were intended to be covered by Section 230; litigation this year will likely clarify the issue.

Actors who could act — and why many aren’t

- Department of Justice: Can pursue federal criminal violations (CSAM, other crimes). So far focused on end users; unclear whether DOJ will target platforms/operators.

- Federal Trade Commission: Enforcement role for Take It Down’s takedown provisions; political composition and priorities of the FTC matter (concerns over current commission makeup).

- State attorneys general: Several have opened inquiries; may bring suits.

- Congress: Could hold hearings, amend laws (private right of action, clarifying platform liability).

- App stores (Apple, Google): Have policy levers to remove apps that facilitate non‑consensual imagery; their silence/inaction undermines their longstanding safety justification for walled gardens and risks exposing selective enforcement.

- Payment processors/cloud providers: Targeted down‑the‑stack liability (e.g., California law) could force service providers to drop harmful customers once notified.

- International regulators: EU/UK acts can obligate platforms more directly; some countries are already investigating or blocking Grok functionality.

Why Grok is different (scale × integration)

- Previously, “nudify” and deepfake abuse required chaining multiple tools/platforms; Grok integrates generation + distribution inside a major social platform (X), enabling instantaneous abuse and mass spread.

- Speed and scale make traditional case‑by‑case legal approaches inadequate; even content that might be lawful in isolation can be weaponized when it’s trivially produced and widely distributed.

- Existing policies and tort frameworks often lack language for this form of automated, on‑demand harassment, which can be lawful yet deeply harmful.

Litigation and regulation to watch

- Private lawsuits testing design‑defect / “unreasonably dangerous” claims against xAI/X (e.g., Ashley St. Clair’s suit), and whether courts allow these claims past Section 230 motions.

- DOJ investigations or criminal referrals regarding AI‑generated CSAM and related offenses.

- FTC enforcement of Take It Down takedowns and whether the agency exercises those powers.

- App store removals or policy enforcement by Apple/Google; pressure campaigns from Congress and civil society.

- EU/UK regulator actions under DSA/Online Safety Act and possible country‑level blocks.

- Court rulings clarifying whether generative AI output is covered by Section 230 immunity.

Policy implications and recommendations (from the episode)

For policymakers and regulators

- Clarify legal responsibility for generative AI output (statutory or via litigation precedent) — including whether AI output is treated like user content or publisher/creator content.

- Empower faster enforcement mechanisms and private rights of action so victims can compel takedowns and seek remedies.

- Consider downstream liability rules for service providers (payment processors, hosting providers) once notified of non‑consensual deepfake services.

For platforms and gatekeepers

- Apply existing app store and payments policies consistently (don’t treat enforcement as a discretionary favor).

- Invest in stronger, demonstrable safeguards (proven detection/filters, human review, reporting/takedown workflows) rather than performative policy statements.

- Build better community‑driven moderation tools and transparency about enforcement decisions.

For the public / potential victims

- Use existing reporting channels (platform reporting tools, National Center for Missing and Exploited Children/CyberTipline where CSAM is involved).

- Preserve evidence (timestamps, URLs) and consider legal counsel if targeted; watch for takedown avenues under Take It Down and state laws.

Notable quotes and insights

- Nilay Patel: Grok has become “a one‑click harassment machine that's generating intimate images of women and children without their consent.”

- Riana Pfefferkorn: “Age verification is not a one‑size‑fits‑all solution.” Focusing on age misses the core harm of non‑consensual imagery being produced and weaponized.

- On the enforcement gap: “Many of the people with the power to do something about Grok here in the United States are choosing to do nothing.”

What to watch next (short list)

- Any DOJ or FTC investigation announcements targeting X/xAI.

- Major lawsuits (class actions / private suits) alleging platform liability or defective design.

- App store removals or policy enforcement from Apple/Google.

- UK/EU regulator findings under DSA/Online Safety Act and any country‑level blocks.

- Court decisions clarifying Section 230’s applicability to AI‑generated content.

This episode frames Grok as a pivotal test case for how law, platform policy, and tech gatekeepers handle AI‑enabled, automated harms — and highlights a widening enforcement and policy gap that will likely be litigated and regulated in the coming months.