Overview of "Reality is losing the deepfake war" (Decoder, The Verge)

This episode of Decoder (host Nilay Patel) features Verge reporter Jess Weatherbed and examines whether metadata/labeling systems can preserve a shared sense of reality as AI-generated and AI-manipulated photos and videos proliferate. The conversation centers on C2PA (the provenance/“content credentials” approach pushed by Adobe and partners), why these systems are failing in practice, the mixed incentives that slow or block adoption, and what might realistically happen next.

Main takeaways

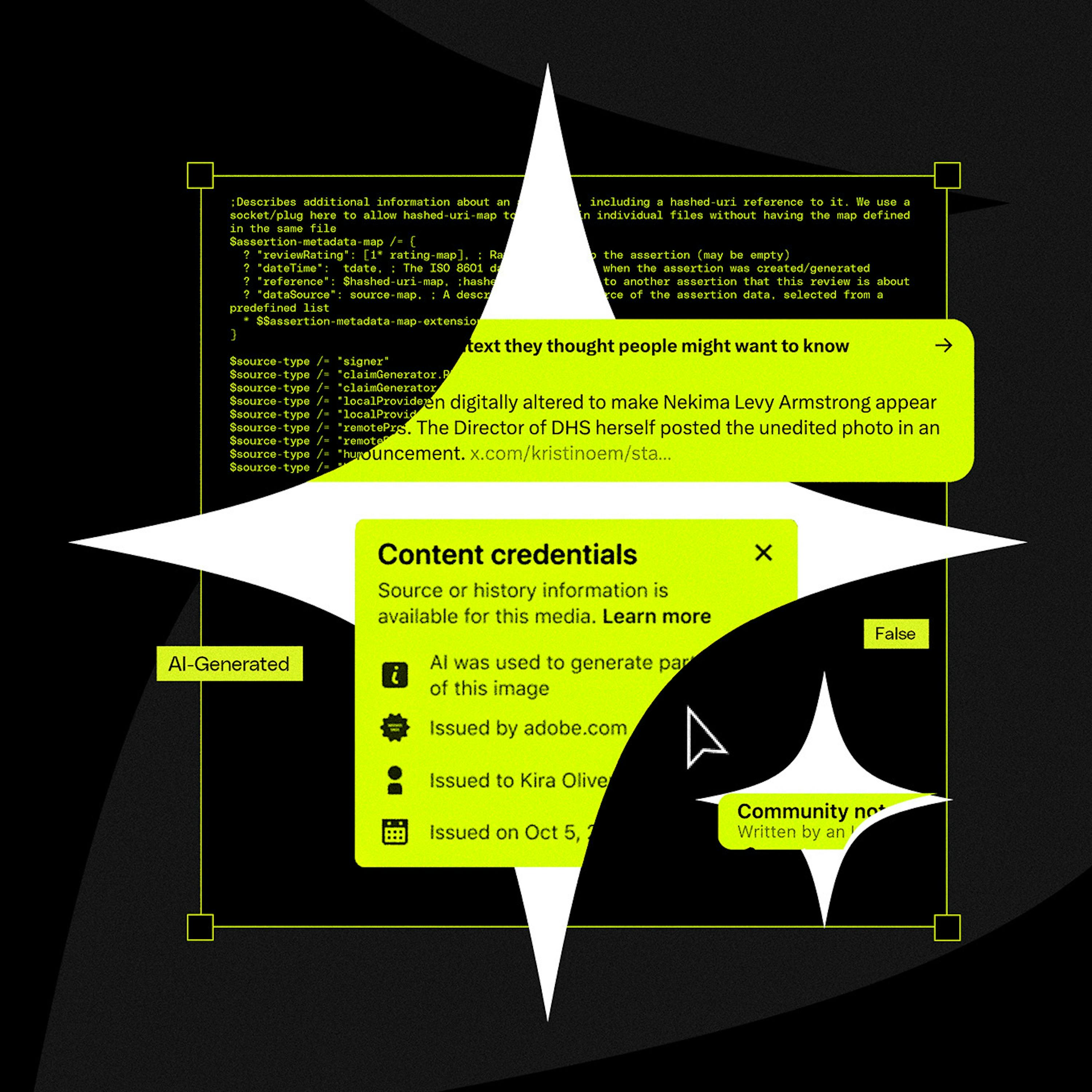

- C2PA/content-credential approaches were designed for provenance (who created/edited a file and how), not as a foolproof AI-detection system; they are therefore a poor fit for the scale and threat of modern deepfakes.

- Technical limitations (metadata can be stripped or lost during uploads), inconsistent platform adoption, and unclear product/communication choices have rendered labeling schemes largely ineffective at restoring public trust.

- Big tech firms and platforms have conflicting incentives: they’re both major AI developers and the largest distributors of content, making them reluctant or slow to adopt labeling that could reduce engagement or revenue.

- Bad-faith actors (including state actors) and underground tools outside major vendors’ ecosystems further undermine any centralized labeling standard.

- The realistic path forward will likely include regulatory intervention, more targeted provenance for trusted uses (press, agencies, verified creators), and continued fragmentation — but no quick universal fix.

What is C2PA and how it was supposed to work

- C2PA (the Coalition for Content Provenance and Authenticity, part of a broader content-authenticity effort that Adobe and others helped create) is a metadata/provenance standard intended to record capture and editing steps from the point of creation (camera, time, tools used).

- Idea: metadata ("content credentials") travels with the file so platforms and viewers can see provenance or an “AI used” indicator without manual effort.

- Alternatives/adjacent approaches: Google’s SynthID (embedded watermark), inference detectors (models that analyze media for likely AI artifacts). These methods are complementary in concept but differ in guarantees and failure modes.
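To make the provenance idea concrete, here is a minimal sketch of a signed manifest bound to a file's bytes. It is a toy, not the C2PA manifest format or any C2PA SDK: `make_manifest`, `verify`, and `SIGNING_KEY` are hypothetical names, and an HMAC with a shared secret stands in for the certificate-based public-key signatures real content credentials use. It shows the two properties the approach relies on: an edit that was not recorded breaks the binding, and a missing manifest tells a viewer nothing either way.

```python
import hashlib
import hmac
import json
from typing import Optional

# HYPOTHETICAL demo key. Real content credentials use certificate-based
# public-key signatures; a shared-secret HMAC is only a stand-in here.
SIGNING_KEY = b"demo-key-not-for-real-use"

def _sign(claim: dict) -> str:
    # Canonical JSON so the same claim always produces the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()

def make_manifest(content: bytes, assertions: dict) -> dict:
    """Build a toy provenance manifest bound to the exact content bytes."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "assertions": assertions,  # e.g. capture device, edit steps, AI use
    }
    return {"claim": claim, "signature": _sign(claim)}

def verify(content: bytes, manifest: Optional[dict]) -> str:
    """Check a toy manifest against the content it claims to describe."""
    if manifest is None:
        # Stripped or never attached: this says nothing about real vs. fake.
        return "no credentials"
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return "invalid signature"  # the claim itself was altered
    if manifest["claim"]["content_sha256"] != hashlib.sha256(content).hexdigest():
        return "content changed since signing"  # an unrecorded edit
    return "valid"

photo = b"raw pixel bytes"
manifest = make_manifest(photo, {"device": "ExampleCam", "ai_used": False})
print(verify(photo, manifest))         # valid
print(verify(photo + b"!", manifest))  # content changed since signing
print(verify(photo, None))             # no credentials
```

Note the last case: once metadata is stripped, the result is indistinguishable from a file that never had credentials, so absence of credentials cannot be treated as evidence of fakery.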

Why labeling systems are failing in practice

- Metadata is fragile: uploads, platform processing, screenshots and re-encodings often strip or corrupt metadata. Even proponents admit it can be removed easily in practice.

- Partial adoption: only some phones/cameras and a subset of platforms read or preserve credentials. Without near-universal adoption the benefits collapse.

- Misaligned design goals: C2PA was never built to be the single arbiter of “real vs fake” at global scale; it’s oriented to provenance for creators and workflows.

- Communication and UX: platforms that do display labels struggle with deciding what to show, how to explain it, and how to weigh degrees of AI editing. Labels often anger creators and confuse consumers.

- Incentives: platforms monetize volume and engagement; widespread labeling can devalue the content that drives engagement and ad revenue. Some platforms have pulled back after experimenting (e.g., Instagram scaled back its labels after creator backlash).

- Bad-faith actors, including governments: some actors, government agencies among them, deliberately release AI-generated images, which worsens the trust problem; platforms aren’t consistently policing or labeling these cases.
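The fragility and partial-adoption points compound as a file is reposted. A back-of-the-envelope sketch, assuming (hypothetically) that each hop a file passes through, such as an upload, a re-encode, or a screenshot, independently keeps credentials with some probability: the surviving fraction is the product of those probabilities, and a single stripping hop drives it to zero. The function name and the preservation rates below are illustrative, not measured.

```python
def surviving_fraction(preserve_rates: list[float]) -> float:
    """Fraction of files still carrying credentials after every hop.

    Hypothetical model: each hop (upload, re-encode, screenshot, repost) keeps
    credentials independently with the given probability.
    """
    fraction = 1.0
    for rate in preserve_rates:
        fraction *= rate
    return fraction

# Illustrative rates only: three hops that each keep credentials 90% of the time.
print(surviving_fraction([0.9, 0.9, 0.9]))  # about 0.729
# One hop that always strips metadata (e.g. a screenshot) wipes out the signal.
print(surviving_fraction([1.0, 0.0, 1.0]))  # 0.0
```

Even generous per-hop rates erode quickly, which is why near-universal preservation matters more than any single platform's support.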

Who’s involved and current adoption landscape

- Adobe / Content Authenticity Initiative: originator and loudest advocate for content credentials; useful for provenance in creative workflows.

- C2PA coalition: its steering members include several major vendors, and many big names are members in principle (the conversation mentions Microsoft, Google, OpenAI, and Qualcomm), but public commitment and active adoption vary.

- Google: SynthID watermarking + Pixel embedding; YouTube has partial labeling but inconsistent coverage.

- Apple: Not publicly committed; reportedly cautious and largely on the sidelines.

- Meta / Instagram: public statements from Instagram head Adam Mosseri acknowledge a shift toward “starting with skepticism” about whether media is authentic; Instagram experimented with labels but pulled back after creator backlash.

- X/Twitter, TikTok, YouTube: patchy or inconsistent implementations; some platforms strip metadata in uploads or fail to surface it reliably.

- Camera makers (Sony, Nikon, Leica, etc.): some engagement, but the limited ability to retrofit existing camera hardware with credential support (e.g., via firmware updates) is a practical blocker.

- Stock/photo agencies: Shutterstock is involved; a “trusted middleman” model (press images verified by agencies) would help but isn’t widely implemented.

Social and cultural implications

- The era of “trust your eyes” is ending: platforms and some executives are publicly acknowledging that photos and videos should no longer be trusted by default.

- Creators and audiences react strongly to AI labels: creators fear devaluation of their work; audiences get confused or angry; labeling can catalyze social friction.

- Civic risk: the spread of politically salient deepfakes (including by government institutions) magnifies harm and erodes democratic information flows.

- Friction for everyday UX: “What counts as AI?” is increasingly unclear because many capture and editing features involve AI assistance (e.g., HDR stacking, night mode, content-aware edits) that creators might not perceive as AI.

Notable quotes from the episode

- Adam Mosseri (Instagram): “For most of my life, I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case… we’re going to move from assuming what we see is real by default to starting with skepticism.”

- Jess Weatherbed: C2PA “was designed as more of a photography metadata standard, not an AI-detection system,” and in practice “has failed” as the single solution for the current scale of deepfakes.

Potential next steps / realistic solutions

- Expect regulation: governments may compel minimum provenance, disclosure, or platform responsibilities (e.g., Online Safety-style laws), because voluntary industry coordination has been insufficient.

- Trusted pipelines for critical media: enforce provenance for press/official imagery via verified agencies, contractual requirements, or centralized verification services (e.g., stock/press middlemen).

- Better platform practices: platforms need consistent, robust ingestion and preservation of provenance data and clearer UX for users — but that requires willingness to reduce ambiguous engagement-driven content.

- Hybrid defenses: combine embedded signals (watermarks, C2PA credentials), inference-based detection, and human-in-the-loop review for high-risk content.

- Continued fragmentation: expect multiple standards and partial solutions to co-exist — there will be no single universal fix in the near term.
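The hybrid-defense idea can be sketched as a triage rule that combines signals and escalates high-risk content to people. Everything here is hypothetical: the `Signals` fields, the 0.8 threshold, and the label strings are illustrative assumptions, not any platform's policy or any vendor's API. In practice the watermark and detector inputs would come from separate tools, each with its own error rates.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical credential outcomes that should count as red flags.
SUSPICIOUS_CREDENTIALS = {"invalid signature", "content changed since signing"}

@dataclass
class Signals:
    credential_status: str              # e.g. "valid", "no credentials", "invalid signature"
    credential_says_ai: Optional[bool]  # AI-use claim from a valid credential; None if unavailable
    watermark_detected: bool            # output of a watermark detector (hypothetical input)
    detector_score: float               # 0..1 AI-likelihood from an inference model (hypothetical input)
    high_risk: bool                     # e.g. news or political content, per platform policy (hypothetical)

def triage(s: Signals, threshold: float = 0.8) -> str:
    """Combine provenance, watermark, and detector signals into a labeling decision."""
    # Strongest evidence first: a detected watermark, or a valid credential declaring AI use.
    if s.watermark_detected or (s.credential_status == "valid" and s.credential_says_ai is True):
        return "label: AI-generated"
    # Valid provenance saying no AI was used, and the detector does not disagree.
    if (s.credential_status == "valid" and s.credential_says_ai is False
            and s.detector_score < threshold):
        return "label: has provenance"
    # Suspicious or conflicting evidence: people decide on high-risk content.
    if s.credential_status in SUSPICIOUS_CREDENTIALS or s.detector_score >= threshold:
        return "human review" if s.high_risk else "label: possibly AI"
    # No usable signal either way.
    return "human review" if s.high_risk else "no label"

print(triage(Signals("no credentials", None, False, 0.95, True)))  # human review
```

Ordering is the main design choice in this sketch: embedded signals are checked first, a detector score alone never yields a definitive label, and ambiguous high-risk content goes to human review rather than being auto-labeled.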

What to watch next

- Regulatory moves in the US, UK, EU around AI content provenance and platform responsibility.

- Apple’s position: whether iPhone will adopt provenance/watermarking in-camera (huge leverage if they do).

- Platform behavior changes: whether major platforms adopt uniform policies for preserving/reading content credentials or if commercial incentives block progress.

- Camera manufacturers’ firmware updates or other retrofit options for embedding credentials on older devices.

- Adoption by news agencies and stock photo services to create “trusted” verified streams.

Concise verdict

Labeling and provenance standards like C2PA can help in narrow, provenance-oriented contexts (creative workflows, press verification), but they will not by themselves restore a universally trusted media ecosystem. Technical fragility, inconsistent adoption, commercial incentives, and bad-faith actors mean the “deepfake war” is being lost at scale unless regulations and new trusted pipelines step in — and even then, solutions will be partial and contested for years.